In today's data-driven landscape, businesses need data storage that is efficient, scalable, and able to handle many kinds of data. The data lake plays a key role in modern data architectures. This article examines the concept of data lakes and shows how AWS Lake Formation supports their effective implementation.

What are Data Lakes?

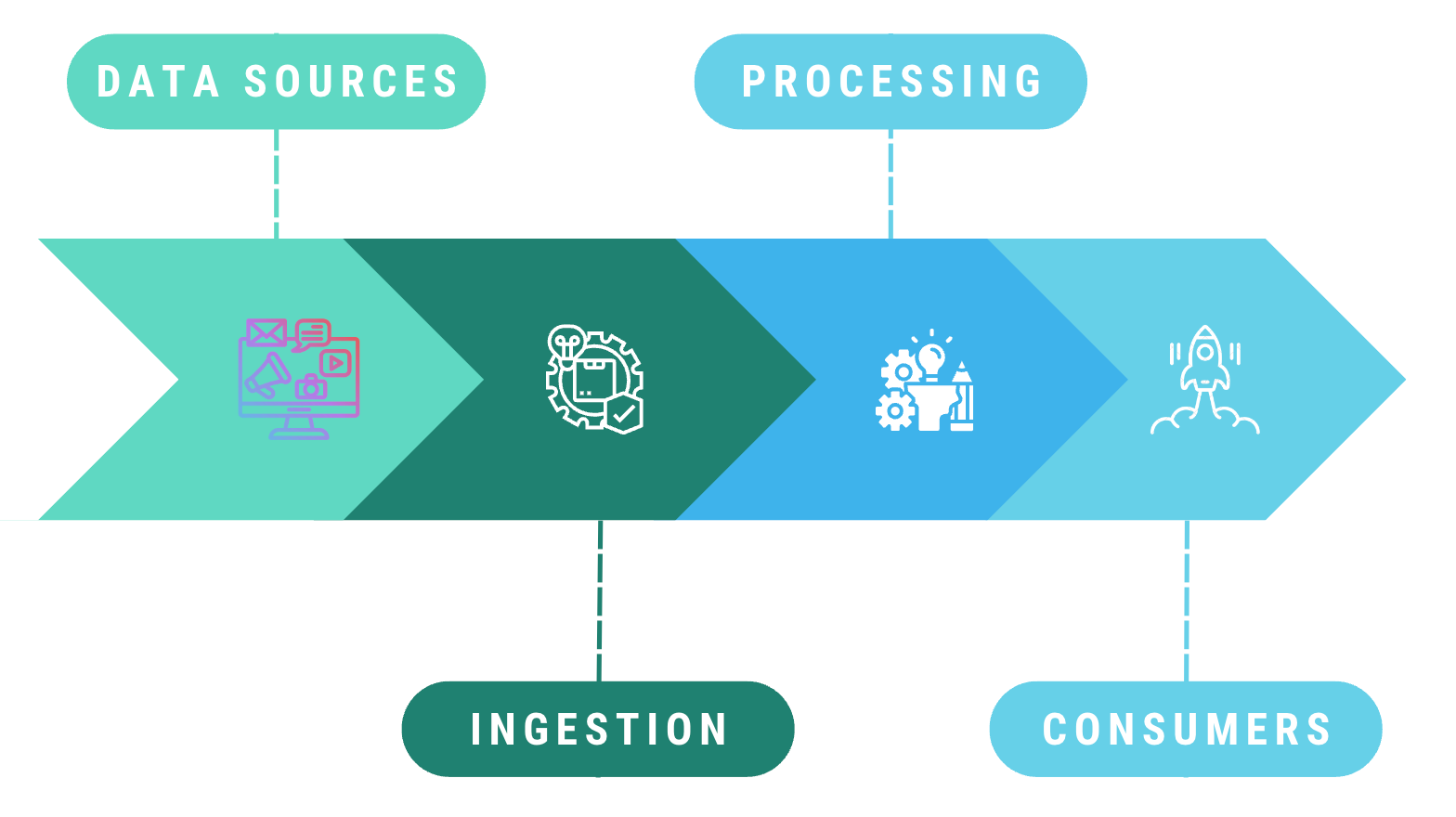

A data lake is a centralized storage system that can store large volumes of raw data in its native format until it's needed. This "native format" concept is pivotal. While traditional databases and data warehouses require structured data, data lakes are agnostic to the structure and type of the data they hold:

- Structured Data: Think of relational databases with defined tables, rows, and columns.

- Semi-Structured Data: This includes formats like JSON, XML, and more, which might not fit neatly into tables but have some organization.

- Unstructured Data: Log files, videos, and images fall into this category.

One of the key philosophies underpinning data lakes is "schema-on-read", as opposed to the traditional "schema-on-write" approach. In a data warehouse, you define the structure (or schema) of your data before writing it into the database. In a data lake, by contrast, data is ingested without a predefined structure, and the schema is applied only when the data is read or processed.
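To make schema-on-read concrete, here is a minimal Python sketch using only the standard library. The raw records and field names are hypothetical. The records are stored exactly as they arrived, and each reader applies its own schema only at read time, so two teams can interpret the same raw data differently.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical raw events, stored as-is: no schema was enforced when they were written.
RAW_EVENTS = [
    '{"user": "alice", "amount": "19.99", "ts": "2024-01-15"}',
    '{"user": "bob", "amount": 5, "country": "DE"}',
    '{"user": "carol"}',
]

# A reader-defined schema: field name -> function that converts the raw value.
Schema = Dict[str, Callable[[Any], Any]]


def read_with_schema(lines: List[str], schema: Schema) -> List[Dict[str, Any]]:
    """Parse raw JSON lines and project each record onto `schema` at read time.

    Fields missing from a record become None; fields not in the schema are ignored.
    """
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, convert in schema.items():
            value = record.get(field)
            row[field] = convert(value) if value is not None else None
        rows.append(row)
    return rows


# Two readers apply different schemas to the same raw data.
billing_rows = read_with_schema(RAW_EVENTS, {"user": str, "amount": float})
geo_rows = read_with_schema(RAW_EVENTS, {"user": str, "country": str})

print(billing_rows)
print(geo_rows)
```

A schema-on-write system would instead require every record to match a table definition before it could be loaded.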

The Promise of Data Lakes

- Scalability: Traditional databases might struggle or become costly as data volumes grow. Data lakes, especially when backed by cloud object storage such as Amazon S3, scale almost seamlessly with data.

- Flexibility: Data lakes can store diverse datasets—no need for different storage solutions for logs, images, and structured data.

- Advanced Analytics: With data of varied types in one place, advanced analytics, including machine learning models, becomes more accessible.

The Challenges of Data Lakes

Despite their utility, data lakes come with their own set of challenges:

- Complexity in Management: The sheer volume and variety of data demand robust tools and practices for data management, ingestion, and cataloging. An improperly managed data lake can quickly become overwhelming, leading to inefficiencies.

- Data Integrity and Quality: Not all data flowing into the lake is clean or valuable. Consistent data quality is essential for deriving reliable insights and keeping downstream operations running smoothly.

- Metadata Management: Understanding what data resides in the lake, its origins, its structure, and its relations is critical. Effective metadata management is necessary to avoid the unwanted "data swamp" scenario (a mismanaged data lake where stored data becomes inaccessible or unusable).

- Security & Compliance: With large amounts of potentially sensitive data in one place, establishing rigorous security measures and complying with data privacy regulations become significant concerns.

- Performance Concerns: Because schema-on-read defers structuring data until query time, data may not be stored in a layout optimized for specific analytical tasks, which can lead to performance bottlenecks.

AWS Lake Formation: Addressing These Challenges

AWS Lake Formation is purpose-built to tackle data lake challenges:

Efficient Data Management

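Lake Formation's blueprints are templates for common ingestion patterns, such as loading tables from a relational database (as a full snapshot or incrementally) or importing log sources like AWS CloudTrail and Elastic Load Balancing logs. Each blueprint generates an AWS Glue workflow that can run on a schedule and be monitored from the console, keeping the lake up to date for analytics and long-term storage without hand-built ingestion pipelines.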

Data Integrity

AWS Lake Formation integrates closely with AWS Glue, which automates much of the time-consuming ETL (Extract, Transform, Load) work. Glue jobs can clean, validate, and reshape incoming data so that it stays in a usable form. By converting data into analytics-optimized formats, they also improve query performance while maintaining data quality.
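Glue ETL jobs are usually written in PySpark or Python. The sketch below deliberately uses plain Python rather than Glue's own libraries, to illustrate the kind of cleaning step such a job might perform. The order fields and validation rules are hypothetical: records that fail type conversion or a business rule are set aside for review instead of flowing into downstream tables.

```python
from datetime import date
from typing import Any, Dict, List, Tuple

Record = Dict[str, Any]


def clean_orders(records: List[Record]) -> Tuple[List[Record], List[Record]]:
    """Split raw order records into (valid, rejected).

    Valid records are converted to proper types; anything that cannot be
    converted, or that breaks a business rule, goes to `rejected` unchanged.
    """
    valid: List[Record] = []
    rejected: List[Record] = []
    for rec in records:
        try:
            row = {
                "order_id": int(rec["order_id"]),
                "total": round(float(rec["total"]), 2),
                "order_date": date.fromisoformat(rec["order_date"]),
            }
        except (KeyError, TypeError, ValueError):
            # Missing field, wrong type, or unparseable value.
            rejected.append(rec)
            continue
        if row["total"] < 0:
            # Hypothetical business rule: order totals cannot be negative.
            rejected.append(rec)
            continue
        valid.append(row)
    return valid, rejected


raw_orders = [
    {"order_id": "1", "total": "19.99", "order_date": "2024-01-15"},
    {"order_id": "2", "total": "-5", "order_date": "2024-01-16"},    # negative total
    {"order_id": "x", "total": "3.50", "order_date": "2024-01-16"},  # bad id
    {"order_id": "4", "total": "7", "order_date": "2024-02-30"},     # impossible date
    {"order_id": "5", "total": "12.5"},                              # missing date
    {"order_id": "6", "total": "8", "order_date": "2024-03-01"},
]

valid, rejected = clean_orders(raw_orders)
print(f"{len(valid)} valid, {len(rejected)} rejected")
```

Keeping rejected records, rather than dropping them, makes it possible to trace quality problems back to their source.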

Metadata Management

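Lake Formation doesn't just ingest and store data; it organizes it. AWS Glue crawlers can scan data in Amazon S3, infer its schema and partitions, and register the results as tables in the AWS Glue Data Catalog, which Lake Formation uses as its central metadata store. With one shared catalog, users can find datasets, understand their structure, and query them from services such as Amazon Athena and Amazon Redshift Spectrum.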
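Crawlers normally create catalog entries automatically, but it helps to see what a table entry contains. The sketch below builds the `TableInput` structure accepted by the AWS Glue `CreateTable` API for Parquet data in S3. The database, table, column names, and bucket are hypothetical; the input/output format and SerDe names are the standard Hive classes for Parquet tables.

```python
from typing import Any, Dict

# Standard Hive classes for reading and writing Parquet tables.
PARQUET_INPUT_FORMAT = "org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat"
PARQUET_OUTPUT_FORMAT = "org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat"
PARQUET_SERDE = "org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe"


def parquet_table_input(
    name: str,
    location: str,
    columns: Dict[str, str],
    partition_keys: Dict[str, str],
) -> Dict[str, Any]:
    """Build a Glue Data Catalog TableInput describing a partitioned Parquet table."""
    return {
        "Name": name,
        "TableType": "EXTERNAL_TABLE",
        "Parameters": {"classification": "parquet"},
        "PartitionKeys": [{"Name": n, "Type": t} for n, t in partition_keys.items()],
        "StorageDescriptor": {
            "Columns": [{"Name": n, "Type": t} for n, t in columns.items()],
            "Location": location,
            "InputFormat": PARQUET_INPUT_FORMAT,
            "OutputFormat": PARQUET_OUTPUT_FORMAT,
            "SerdeInfo": {"SerializationLibrary": PARQUET_SERDE},
        },
    }


# Hypothetical table: orders stored as Parquet, partitioned by order date.
table_input = parquet_table_input(
    name="orders",
    location="s3://example-bucket/curated/orders/",
    columns={"order_id": "bigint", "customer_id": "string", "total": "decimal(10,2)"},
    partition_keys={"order_date": "string"},
)
print(table_input["StorageDescriptor"]["Columns"])

# With AWS credentials configured, the entry could be registered with boto3:
#   import boto3
#   boto3.client("glue").create_table(DatabaseName="sales", TableInput=table_input)
```

The column names, types, location, and partition keys recorded here are exactly the metadata that lets users discover the dataset and lets query engines read it.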

Security & Compliance

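Security is central to Lake Formation. Its permissions aren't just binary access controls; they're fine-grained. Administrators can grant access at varying levels, from an entire database down to individual columns, and data filters can further restrict access to specific rows. New objects in Amazon S3 are encrypted at rest by default, keys managed in the AWS Key Management Service (KMS) can be used for tighter control, and traffic to AWS service endpoints can be encrypted in transit with TLS.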
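Column-level grants can be made in the Lake Formation console or through its API. The sketch below builds the arguments for the Lake Formation `GrantPermissions` API, restricting `SELECT` on one table to a subset of columns. The role ARN (using AWS's documentation placeholder account ID), database, table, and column names are hypothetical.

```python
from typing import Any, Dict, List


def column_grant_request(principal_arn: str, database: str, table: str,
                         columns: List[str]) -> Dict[str, Any]:
    """Build keyword arguments for Lake Formation's GrantPermissions call,
    limiting SELECT to the listed columns of one table."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": columns,
            }
        },
        "Permissions": ["SELECT"],
    }


# Hypothetical principal, database, table, and column names.
grant_request = column_grant_request(
    "arn:aws:iam::111122223333:role/AnalystRole",
    "sales",
    "orders",
    ["order_id", "order_date", "total"],
)
print(grant_request)

# With AWS credentials configured, the grant could be applied with boto3:
#   import boto3
#   boto3.client("lakeformation").grant_permissions(**grant_request)
```

Columns left out of the list, such as a hypothetical `customer_email`, are not returned to that role when it queries the table through Lake Formation-integrated services like Athena.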

Performance Optimization

Lake Formation emphasizes best practices in data management, such as partitioning data and choosing efficient storage formats, which reduce the amount of data each query must read and therefore speed up retrieval. AWS Glue ETL jobs can convert incoming data into analytics-friendly formats as part of this process.

Technical Integration Insights

Lake Formation's capabilities are amplified when combined with other AWS services and sound storage practices:

Data Formats & Compression

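Storing data efficiently is a priority. AWS recommends columnar formats such as Apache Parquet or ORC for data lakes. Because these formats store each column separately, query engines can read only the columns a query needs, and similar values stored together compress well. The result is less storage, less data scanned per query, and therefore lower costs with services that bill by data scanned, such as Amazon Athena, along with more responsive analytics.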
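One common way to apply this advice is an Amazon Athena `CREATE TABLE AS SELECT` (CTAS) query that rewrites raw data as compressed Parquet. The Python sketch below only assembles the SQL text. The table names and S3 location are hypothetical, and it assumes the identifiers come from trusted configuration rather than user input. `format`, `write_compression`, and `external_location` are Athena CTAS table properties; check the Athena documentation for the codecs your engine version supports.

```python
def parquet_ctas(target_table: str, source_table: str, location: str,
                 compression: str = "SNAPPY") -> str:
    """Return an Athena CTAS statement that copies `source_table` into
    compressed Parquet files under `location`.

    Identifiers are interpolated directly, so they must come from trusted config.
    """
    return (
        f"CREATE TABLE {target_table}\n"
        "WITH (\n"
        "  format = 'PARQUET',\n"
        f"  write_compression = '{compression}',\n"
        f"  external_location = '{location}'\n"
        ")\n"
        f"AS SELECT * FROM {source_table}"
    )


# Hypothetical source (raw JSON) and target (curated Parquet) tables.
sql = parquet_ctas(
    target_table="curated.orders_parquet",
    source_table="raw.orders_json",
    location="s3://example-bucket/curated/orders_parquet/",
)
print(sql)
```

The statement can be run in the Athena query editor or submitted programmatically; the `external_location` prefix should be empty beforehand. Queries against the Parquet copy that touch only a few columns typically scan far less data than the same queries against the raw JSON.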

Data Partitioning

Partitioning isn't just a storage strategy; it's a performance enhancer. When data is divided by date or another frequently filtered attribute, such as region, query engines can skip every partition that a query's filter excludes, so specific subsets are retrieved much more quickly. This approach is especially beneficial for datasets spanning terabytes or more.
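A common layout is Hive-style partitioning, where each partition value becomes part of the S3 prefix as `key=value`, a convention that Athena and AWS Glue recognize. The sketch below, with a hypothetical bucket and table path, shows how a date filter translates into a short list of prefixes: a four-day query reads four daily partitions, however many years of data the table holds. It illustrates the idea behind partition pruning, not any engine's actual implementation.

```python
from datetime import date, timedelta
from typing import List


def partition_prefix(table_root: str, day: date) -> str:
    """Hive-style prefix for one daily partition, e.g. .../year=2024/month=01/day=30/."""
    return f"{table_root}/year={day.year}/month={day.month:02d}/day={day.day:02d}/"


def prefixes_for_range(table_root: str, start: date, end: date) -> List[str]:
    """Return only the partition prefixes a query filtered to [start, end] must read.

    Partitions outside the range never need to be listed or scanned.
    """
    num_days = (end - start).days + 1
    return [partition_prefix(table_root, start + timedelta(days=i)) for i in range(num_days)]


root = "s3://example-bucket/events"
prefixes = prefixes_for_range(root, date(2024, 1, 30), date(2024, 2, 2))
for prefix in prefixes:
    print(prefix)
```

Choosing the partition key to match the most common query filters matters: partitioning by a column that queries rarely filter on adds overhead without enabling any pruning.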

Caching Mechanisms

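AWS offers several caching mechanisms to speed up data access. Amazon Redshift caches the results of repeated queries, and Amazon Athena can reuse the result of a previous identical query instead of running it again. When the same query is issued repeatedly, for example by a dashboard that refreshes often, these caches return results with much lower latency and avoid rescanning the underlying data, which also helps during peak loads.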
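In Athena, result reuse is requested per query. The sketch below builds arguments for the boto3 Athena client's `start_query_execution` call with `ResultReuseConfiguration`, which allows Athena to return a stored result if an identical query ran within the given age limit. The database, workgroup, query, and 60-minute limit are hypothetical; the feature requires Athena engine version 3, and the workgroup is assumed to have a query result location configured. Redshift's result cache, by contrast, is on by default and needs no per-query setting.

```python
from typing import Any, Dict


def reusable_query_request(query: str, database: str, workgroup: str,
                           max_age_minutes: int = 60) -> Dict[str, Any]:
    """Build StartQueryExecution arguments that allow Athena to reuse a
    previous result of the same query if it is at most `max_age_minutes` old."""
    return {
        "QueryString": query,
        "QueryExecutionContext": {"Database": database},
        # Assumed: this workgroup already has a query result location configured.
        "WorkGroup": workgroup,
        "ResultReuseConfiguration": {
            "ResultReuseByAgeConfiguration": {
                "Enabled": True,
                "MaxAgeInMinutes": max_age_minutes,
            }
        },
    }


# Hypothetical dashboard query that refreshes often.
dashboard_request = reusable_query_request(
    query="SELECT order_date, SUM(total) FROM orders GROUP BY order_date",
    database="sales",
    workgroup="analytics",
)
print(dashboard_request["ResultReuseConfiguration"])

# With AWS credentials configured:
#   import boto3
#   boto3.client("athena").start_query_execution(**dashboard_request)
```

The age limit is a trade-off: a longer window means more cache hits but potentially staler results for data that changes frequently.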

Conclusion

The decision to adopt a data lake strategy is a significant one. It presents a transformational approach to data management, enabling businesses to utilize diverse datasets for deeper insights and informed decision-making. But as we've discussed, the journey of constructing and maintaining an efficient data lake is not trivial. It requires a deep understanding of both the data landscape and the challenges it presents.

AWS Lake Formation stands out as a comprehensive tool to help businesses navigate these challenges. With its rich feature set, scalability, and integrations with the broader AWS ecosystem, it offers a streamlined path to create and manage data lakes effectively. Organizations can ensure data integrity, optimize for performance, maintain security, and foster an environment conducive to advanced analytics.

But even with the best tools, successful implementation and management demand expertise. Intenics specializes in setting up data lakes and data processing infrastructure and supports small, medium, and large businesses in managing their data effectively. We ensure that your data strategy aligns seamlessly with your business objectives.

In summary, while the idea of a data lake offers immense potential, realizing that potential requires a robust toolset and expertise.