Working with AWS Serverless introduces new considerations compared to traditional architectures. While network issues can still be an issue, there are unique challenges to be aware of in the cloud.

What are Retries?

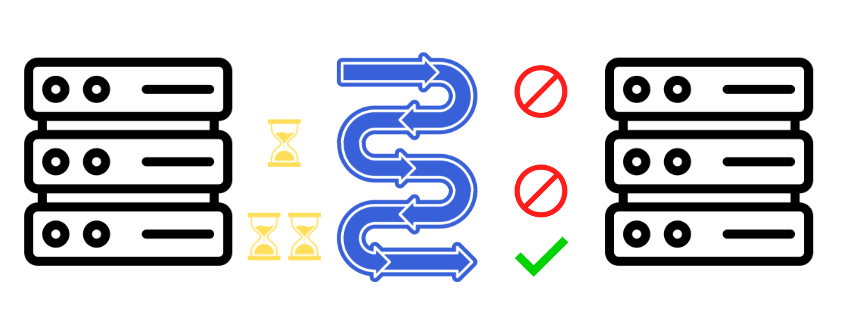

Retries involve reattempting operations after failures. For instance, if a service rate limits our request, we might wait a short amount of time and try again. With each subsequent failure, the wait time increases, enhancing system resilience by allowing operations to succeed in the face of temporary setbacks.

Whey do I need them?

Back in the days, when servers faced high traffic, they'd simply slow down until they are not responding anymore. However, in a cloud environment, and especially when going serverless, you must account for rate limiting imposed by managed services.

And retrying is you first tool to do so.

For Example, additionally, throttling exceptions can arise when a service needs more time to autoscale in response to the current load. A typical example of this is the delay associated with DynamoDB's on-demand scaling, which can take several minutes to double the R/W capacity.

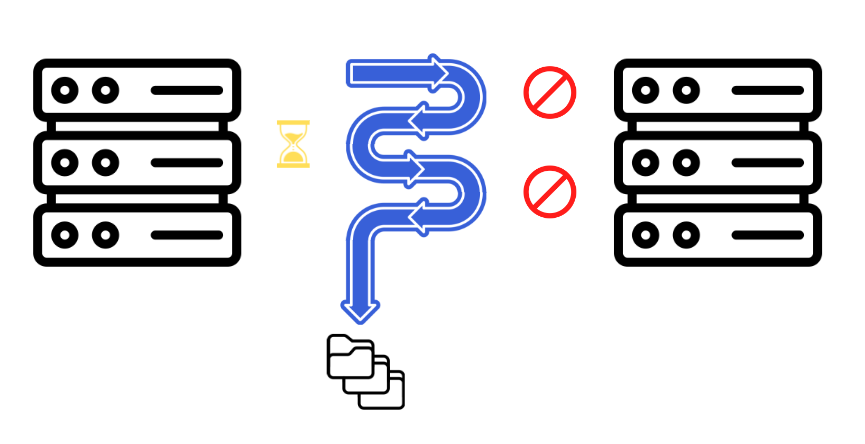

Once you reached the maximum retry count you might think about further measures, like dead letter queues or alike.

Defaults in AWS SDKs

It's worth noting that basic retry behavior is already integrated into AWS SDKs like boto3 or the AWS SDK for JavaScript. This can be both: a good thing and a pitfall. On one hand, it simplifies things for developers. On the other, new developers might not be aware of this aspect, leading to unexpected issues down the road.

Be aware, then accept or change

It's crucial to be proactive about retries. Understand your SDKs default settings and what happens if you exceed the maximum retry count. If you don't like the defaults you can change those to your needs. AWS SDKs v2 even allow customization of the retry function if we are not satisfied:

const customBackoff = (retryCount: number): number => {

const baseDelay = 1000;

return baseDelay * Math.pow(2, retryCount);

};

// Configure the SDK to use the custom backoff function

AWS.config.update({

maxRetries: 5,

retryDelayOptions: {

customBackoff: customBackoff

}

});For a deeper dive into the topic and even more sophisticated backoff functions, see this excellent AWS Blog Post

Conclusion

When adopting a serverless approach, always keep retries in mind. Whether you're sticking with the default SDK settings or opting for customization, being informed is key.