What Engineers Need To Know

Most engineers think in terms of AWS regions and availability zones. Regions have well-known boundaries, but they remain part of the same global AWS partition.

The "aws" in an ARN looks constant, so most engineers never question it.

But that field is the partition, and it changes from one partition to the next. Unless you work in AWS GovCloud (US) or deploy workloads in China, you have probably only ever seen the main partition.

From the outside, partitions look like the main AWS cloud. Inside, they behave like entirely separate clouds. There is no shared identity, no shared network plane, and no native way to exchange data or share governance structures. If you design cross-region systems today, do not assume any of those patterns transfer to cross-partition work.

This article explains how partitions work, why they exist, and what you must consider when building workloads that interact across them, especially if you are used to serverless workloads.

This includes, but is not limited to:

- Understanding AWS partitions

- Identity does not cross partitions

- ARN formats and APIs differ

- Networking ends at the partition boundary

- Governance cannot be shared

- Tooling must learn about partitions

- Data movement is never implicit

- Observability stays inside the partition

Understanding AWS partitions

AWS is not a single global environment. It is divided into partitions, each running its own control plane and carrying its own legal and operational boundaries.

Today there is the standard global partition, AWS GovCloud (US), and AWS China, which operates under a separate governance model.

The upcoming AWS European Sovereign Cloud introduces another fully isolated world, operated from inside the EU with its own staffing, processes, and governance requirements.

The important part is not the geography. It is the isolation. A partition comes with its own IAM system, its own Organizations API, its own account namespace, and its own ARNs.

When you move from cross-region design to cross-partition design, you are no longer working within one cloud. You are building across two clouds that only share familiar service names.

This mindset shift is essential. Once you treat partitions as different clouds, the rest of the architectural decisions begin to make sense.

Identity does not cross partitions

IAM is fully contained within a partition. A role in the global partition is not visible to GovCloud. A user in GovCloud cannot be granted permissions in the global partition. There is no trust, no role assumption, and no shared federation layer across partition boundaries.

This has practical consequences. You cannot centralize permission management. You cannot apply the same Identity Center instance to multiple partitions. You cannot enforce SCPs globally. Every partition becomes its own identity island.

If you want a unified login experience, one option is to use an external identity provider that both partitions trust independently. Even then, you must maintain IAM configuration separately in every partition.

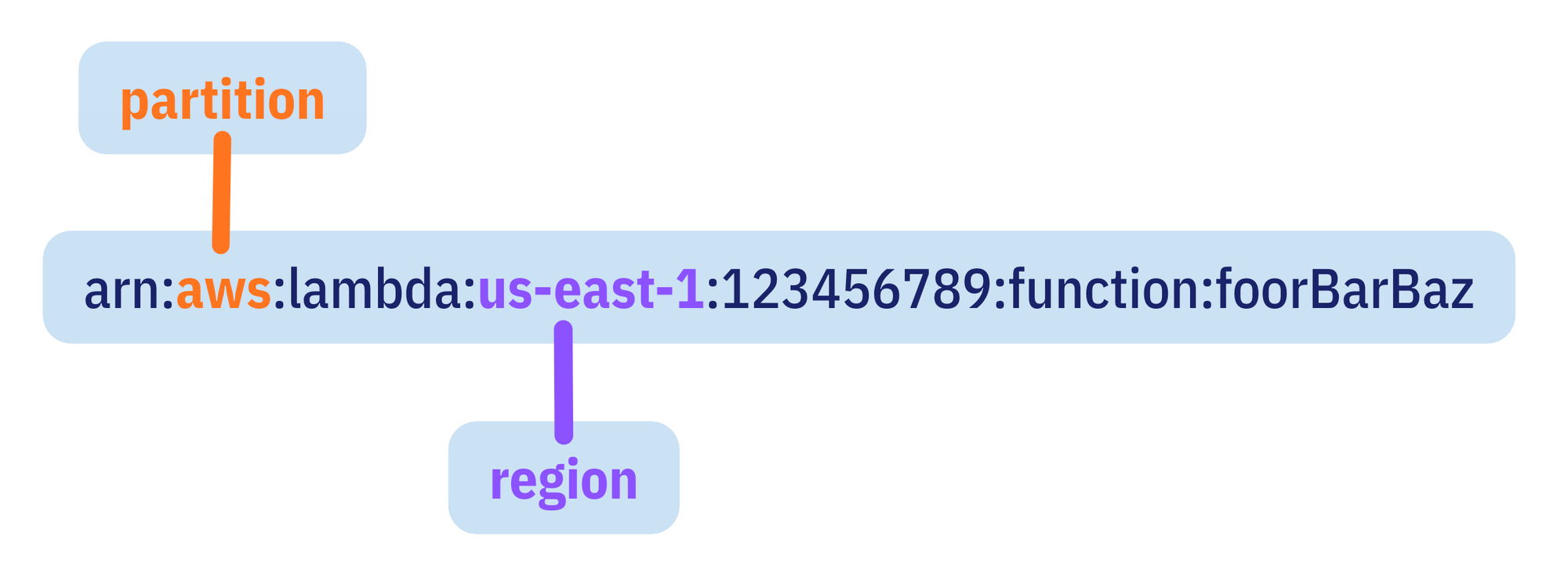
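Because trust never crosses the boundary, a trust policy that names a principal from another partition can never work. A small check in CI can surface that mistake before deployment. The sketch below is hypothetical: `arn_partition` and `foreign_principals` are illustrative helpers, the account IDs are placeholders, and it only inspects ARN principals under the `AWS` key (bare account IDs carry no partition and are skipped).

```python
from __future__ import annotations

from typing import Any


def arn_partition(arn: str) -> str | None:
    """Return the partition field of an ARN, or None if the string is not an ARN."""
    parts = arn.split(":", 5)
    if len(parts) < 6 or parts[0] != "arn":
        return None
    return parts[1]


def foreign_principals(trust_policy: dict[str, Any], home_partition: str) -> list[str]:
    """List AWS principal ARNs in a trust policy that belong to another partition."""
    statements = trust_policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    findings: list[str] = []
    for statement in statements:
        principal = statement.get("Principal", {})
        if not isinstance(principal, dict):
            continue  # e.g. "*" carries no partition to compare
        aws = principal.get("AWS", [])
        for entry in [aws] if isinstance(aws, str) else aws:
            partition = arn_partition(entry)
            if partition is not None and partition != home_partition:
                findings.append(entry)
    return findings


# Example: a GovCloud role whose trust policy (wrongly) lists a commercial-partition role.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {
                "AWS": [
                    "arn:aws:iam::111122223333:role/ci",
                    "arn:aws-us-gov:iam::444455556666:role/deployer",
                ]
            },
            "Action": "sts:AssumeRole",
        }
    ],
}
print(foreign_principals(policy, "aws-us-gov"))  # ['arn:aws:iam::111122223333:role/ci']
```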

ARN formats and APIs differ

Each partition has its own ARN prefix, reflecting its isolation. The global partition uses arn:aws:, GovCloud uses arn:aws-us-gov:, and China uses arn:aws-cn:. The European Sovereign Cloud will have its own as well.

This difference alone breaks many internal tools. Anything that hard-codes an ARN, or constructs one with a fixed prefix, fails as soon as it runs outside the main partition.

- Infrastructure as code must become partition-aware.

- Tooling must parameterize region, service endpoints, ARN patterns, DNS suffixes, and even login URLs, whether it is built on CDK, Terraform, or anything else.

- Internal libraries must treat partition awareness as a first-class concern.
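A cheap first step toward partition awareness is finding every place where the prefix is hard-coded. The sketch below is a hypothetical lint, a regular expression over source text rather than a real parser, so expect false positives in comments and docs. Once a hit is found, the usual fix is to read the partition from the deployment context, for example CloudFormation's AWS::Partition pseudo parameter or Terraform's aws_partition data source.

```python
from __future__ import annotations

import re

# A literal ARN prefix such as "arn:aws:", "arn:aws-cn:" or "arn:aws-us-gov:".
HARDCODED_ARN = re.compile(r"arn:aws(?:-[a-z-]+)?:")


def find_hardcoded_arns(source: str) -> list[tuple[int, str]]:
    """Return (line number, stripped line) for every line with a literal ARN prefix."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        if HARDCODED_ARN.search(line):
            hits.append((lineno, line.strip()))
    return hits


# Line 1 hard-codes the partition; line 2 takes it from a variable and is not flagged.
sample = '''bucket_arn = "arn:aws:s3:::my-bucket"
role_arn = f"arn:{partition}:iam::{account}:role/deployer"
'''
print(find_hardcoded_arns(sample))  # [(1, 'bucket_arn = "arn:aws:s3:::my-bucket"')]
```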

Engineers must stop assuming that AWS means arn:aws:.

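| Partition | Name | Description |
|---|---|---|
| aws | AWS Commercial | The standard public AWS cloud regions worldwide. |
| aws-cn | AWS China | Regions located in mainland China (e.g., Beijing, Ningxia). |
| aws-us-gov | AWS GovCloud (US) | Isolated for US government and compliance use. |
| aws-eu (prediction) | AWS European Sovereign Cloud | Possibly the partition key for the European Sovereign Cloud; not confirmed. |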
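To make the table concrete, here is a minimal sketch of building ARNs with the partition passed in instead of hard-coded. The region prefixes match the GovCloud and China partitions above; `partition_for_region` and `build_arn` are hypothetical helpers, and the European Sovereign Cloud is deliberately left out because its identifiers are not confirmed.

```python
from __future__ import annotations

# Region-name prefixes for the non-commercial partitions listed above.
# The European Sovereign Cloud is left out on purpose: its identifiers are not confirmed here.
_REGION_PREFIXES = (
    ("us-gov-", "aws-us-gov"),  # e.g. us-gov-west-1
    ("cn-", "aws-cn"),          # e.g. cn-north-1
)


def partition_for_region(region: str) -> str:
    """Infer the partition from a region name; anything unrecognized is treated as commercial."""
    for prefix, partition in _REGION_PREFIXES:
        if region.startswith(prefix):
            return partition
    return "aws"


def build_arn(partition: str, service: str, region: str, account: str, resource: str) -> str:
    """Assemble an ARN with the partition passed in rather than hard-coded."""
    return f"arn:{partition}:{service}:{region}:{account}:{resource}"


region = "us-gov-west-1"
partition = partition_for_region(region)
# S3 bucket and IAM ARNs leave the region field empty, so the partition
# must come from the deployment context, not from the ARN's own region field.
print(build_arn(partition, "s3", "", "", "my-bucket"))  # arn:aws-us-gov:s3:::my-bucket
print(build_arn(partition, "iam", "", "111122223333", "role/deployer"))  # arn:aws-us-gov:iam::111122223333:role/deployer
print(build_arn(partition, "sqs", region, "111122223333", "orders"))  # arn:aws-us-gov:sqs:us-gov-west-1:111122223333:orders
```

Defaulting unknown regions to "aws" is itself the kind of silent assumption this article warns about. Real tooling should prefer an authoritative source, such as the partition reported by the deployment context or the SDK's endpoint metadata, and fail loudly when the partition cannot be determined.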

Networking ends at the partition boundary

Inside a partition, AWS offers VPC peering, Transit Gateway, PrivateLink, and more. None of those features cross partitions.

There is no global private network between partitions. No peering. No shared Transit Gateway. No internal backbone that allows two partitions to talk privately.

Traffic between partitions is equivalent to traffic between two unrelated clouds.

Authentication, encryption, retries, and failure handling must all be designed explicitly. You cannot rely on the convenience of AWS internal networking.

This limitation shapes your architecture. APIs must be public or carefully exposed. Latency and network failures need more attention. Systems that previously relied on private AWS connectivity must switch to internet-grade patterns.
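Because retries and failure handling are now your job, every cross-partition call needs an explicit policy. The sketch below shows one common choice, capped exponential backoff with full jitter, wrapped around any callable. TransientError and call_with_retries are hypothetical names; which failures count as transient, and whether a call is safe to repeat, depends on your API.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying: timeouts, resets, 5xx responses."""


def call_with_retries(
    call: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.2,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying TransientError with capped exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last failure to the caller
            cap = min(max_delay, base_delay * 2**attempt)
            sleep(random.uniform(0, cap))  # random delay avoids synchronized retry storms
    raise AssertionError("unreachable")


# Example: a call that fails twice before succeeding; delays are recorded, not slept.
outcomes = iter([TransientError(), TransientError(), "ok"])


def flaky() -> str:
    result = next(outcomes)
    if isinstance(result, Exception):
        raise result
    return result


delays: list[float] = []
print(call_with_retries(flaky, sleep=delays.append))  # ok
print(len(delays))  # 2
```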

Governance cannot be shared

Landing zones, Organizations setup, CloudTrail baselines, Config rules, GuardDuty, Security Hub, and centralized logging must all be provisioned separately for each partition.

From a platform engineering perspective, this means building a full management plane for every partition in which you operate. You need separate management accounts, separate audit accounts, separate security tooling, and separate pipelines to maintain them.

Automation becomes essential. Without it, duplicating and maintaining parallel governance structures becomes fragile and error-prone.
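Automation starts with knowing what each partition is supposed to have. A minimal sketch, assuming a hypothetical inventory reported by each partition's own pipeline, compares every expected partition against one shared baseline definition. The component labels are illustrative, not AWS identifiers, and a partition that reports nothing at all shows up as missing everything.

```python
from __future__ import annotations

# One baseline definition, applied separately to every partition you operate in.
# The labels are illustrative; map them to your own landing-zone components.
BASELINE = frozenset({
    "organizations",
    "cloudtrail-org-trail",
    "config-rules",
    "guardduty",
    "security-hub",
    "central-logging",
})


def baseline_gaps(deployed: dict[str, set[str]], expected: set[str]) -> dict[str, list[str]]:
    """Return, per expected partition, the baseline components not yet provisioned."""
    gaps = {}
    for partition in sorted(expected):
        missing = BASELINE - deployed.get(partition, set())
        if missing:
            gaps[partition] = sorted(missing)
    return gaps


# Inventory as reported by each partition's own pipeline (hypothetical data).
inventory = {
    "aws": set(BASELINE),
    "aws-us-gov": set(BASELINE) - {"security-hub"},
}
print(baseline_gaps(inventory, {"aws", "aws-us-gov"}))  # {'aws-us-gov': ['security-hub']}
```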

Tooling must learn about partitions

Most internal deployment pipelines and tooling assume the global partition. That assumption stops working the moment a company adds a second partition.

A partition-aware toolchain must at least support:

- multiple credential sets

- separate artifact registries

- S3 buckets scoped to a single partition

- partition specific region sets

- differences in service availability

- partition specific IAM behavior

- duplication of ECR images, if used

- isolated Terraform states, if used

Every piece of automation must know which partition it operates in, or failures will be subtle and constant.
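One way to make that knowledge explicit is to pass every pipeline step a single per-partition context and validate against it before doing anything. The sketch below is hypothetical: the field names mirror the list above, the values are placeholders, and the services set is whatever your team has verified in that partition, not an authoritative availability list.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PartitionContext:
    """What a pipeline step must know about its target partition (hypothetical fields)."""

    partition: str                      # "aws", "aws-us-gov", "aws-cn", ...
    credential_profile: str             # one credential set per partition
    regions: frozenset[str]             # regions you deploy to in this partition
    services: frozenset[str]            # services you have verified are available here
    artifact_bucket: str                # S3 bucket that lives in this partition
    ecr_registry: str | None = None     # per-partition image registry, if used
    terraform_state: str | None = None  # isolated state location, if used

    def check(self, region: str, required_services: set[str]) -> None:
        """Fail fast instead of deploying somewhere this partition cannot serve."""
        if region not in self.regions:
            raise ValueError(f"{region!r} is not a configured region of {self.partition!r}")
        missing = required_services - self.services
        if missing:
            raise ValueError(f"{self.partition!r} lacks services: {sorted(missing)}")


govcloud = PartitionContext(
    partition="aws-us-gov",
    credential_profile="govcloud-deployer",
    regions=frozenset({"us-gov-west-1", "us-gov-east-1"}),
    services=frozenset({"lambda", "sqs", "s3"}),
    artifact_bucket="example-artifacts-govcloud",
)
govcloud.check("us-gov-west-1", {"lambda", "sqs"})  # passes silently
```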

This is often the hardest organizational challenge, because it requires rethinking existing build systems, environment setup, and infrastructure libraries. But it is also the foundation for future scalability.

Data movement is never implicit

Because partitions are isolated, there is no shared S3, no shared KMS, no shared EventBridge, and no shared SNS or SQS. Moving data between partitions is equivalent to moving data between clouds. Every transfer must be explicit.

You will rely on public endpoints or, in some cases, private leased lines. You may need to design authentication and encryption at the application layer. You will need versioned APIs or well-defined export pipelines.
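As an illustration of application-layer authentication on top of a versioned export format, the sketch below wraps each record in an envelope carrying a schema version and the source partition, then signs it with HMAC-SHA256 from Python's standard library. It is a sketch under assumptions, not a prescribed protocol: the envelope fields are hypothetical, HMAC provides integrity and authenticity but not confidentiality (you still need TLS or payload encryption), and because KMS keys do not cross partitions, the shared secret must be provisioned in each partition separately.

```python
from __future__ import annotations

import hashlib
import hmac
import json

SCHEMA_VERSION = 1  # bump when the export format changes


def _canonical(body: dict) -> bytes:
    """Serialize deterministically so sender and receiver sign identical bytes."""
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()


def seal(payload: dict, key: bytes, source_partition: str) -> bytes:
    """Wrap a payload in a versioned envelope and sign it with HMAC-SHA256."""
    body = {"version": SCHEMA_VERSION, "source": source_partition, "payload": payload}
    signature = hmac.new(key, _canonical(body), hashlib.sha256).hexdigest()
    return json.dumps({"body": body, "sig": signature}).encode()


def open_envelope(message: bytes, key: bytes) -> dict:
    """Verify the signature and schema version, then return the payload."""
    envelope = json.loads(message)
    expected = hmac.new(key, _canonical(envelope["body"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, envelope["sig"]):
        raise ValueError("signature mismatch")
    if envelope["body"]["version"] != SCHEMA_VERSION:
        raise ValueError("unsupported schema version")
    return envelope["body"]["payload"]


key = b"example-shared-secret"  # placeholder only; load real keys from a secret store
message = seal({"order_id": 42}, key, "aws")
print(open_envelope(message, key))  # {'order_id': 42}
```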

Observability stays inside the partition

CloudWatch, X-Ray, CloudTrail, and CloudWatch Logs do not cross partitions. You cannot query or aggregate them natively. Every metric, every alarm, every trace lives entirely inside its own partition unless you export it.

You must decide whether to:

- push all logs and metrics to a common store, either a third-party service or one of the partitions, or

- keep each partition independent and aggregate dashboards at a higher level

Either option is valid, but neither comes for free. On-call teams must know which partition emitted an alarm, and runbooks must treat partitions as independent operational domains.
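One inexpensive way to keep the emitting partition visible is to derive it from the alarm ARN, which already carries the partition field, and put it at the front of every page. The helpers and the title format below are hypothetical; they assume your tooling receives the CloudWatch alarm ARN, for example from the alarm notification.

```python
from __future__ import annotations

from typing import NamedTuple


class AlarmRoute(NamedTuple):
    partition: str
    region: str
    account: str
    alarm_name: str


def route_alarm(alarm_arn: str) -> AlarmRoute:
    """Split a CloudWatch alarm ARN so on-call tooling always sees the partition."""
    parts = alarm_arn.split(":", 5)  # the alarm name itself may contain colons
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "cloudwatch" or not parts[5].startswith("alarm:"):
        raise ValueError(f"not a CloudWatch alarm ARN: {alarm_arn!r}")
    _, partition, _, region, account, resource = parts
    return AlarmRoute(partition, region, account, resource[len("alarm:"):])


def page_title(route: AlarmRoute) -> str:
    """Lead with the partition so responders open the right cloud and runbook."""
    return f"[{route.partition}/{route.region}] {route.alarm_name} ({route.account})"


route = route_alarm("arn:aws-us-gov:cloudwatch:us-gov-west-1:111122223333:alarm:HighErrorRate")
print(page_title(route))  # [aws-us-gov/us-gov-west-1] HighErrorRate (111122223333)
```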

Final thoughts

Working across AWS partitions requires a shift in thinking. Partitions behave like different clouds that share similar APIs but none of the underlying identity, governance, or network layers.

Engineers who prepare for this model will design systems that treat partitions as first-class boundaries. They will parameterize their tools, duplicate their governance structures correctly, build strong API-based integrations, and avoid coupling workloads to assumptions that only hold inside one partition.

Partition awareness is becoming a core cloud engineering skill. Now is the right time to prepare your teams for it.